Author: Levi

The release of ChatGPT by OpenAI last year triggered widespread interest in AI applications, but it also led to “AI-washing”: projects exploiting the AI hype to pump their tokens, misleading investors and undermining genuine AI projects.

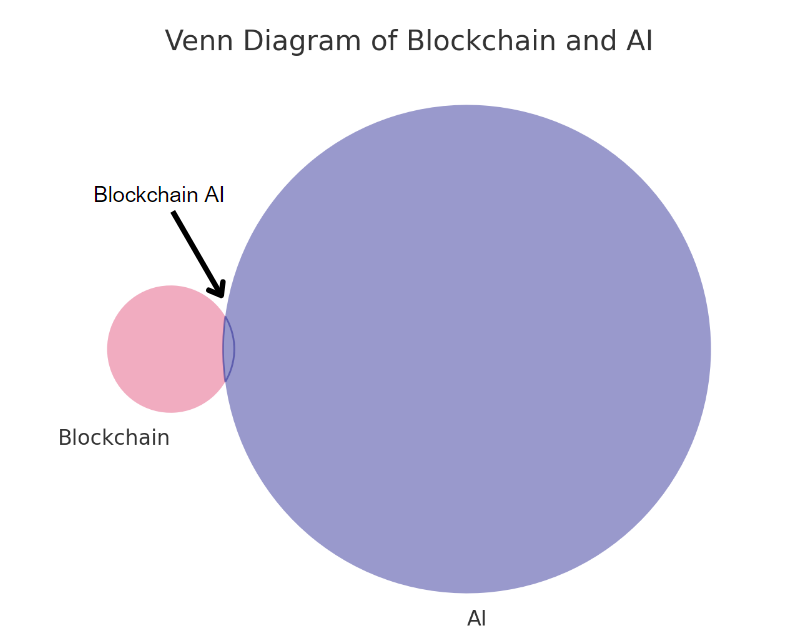

AI and Web3 are distinct technologies. AI requires centralized processing power and massive data for training, while Web3 operates on a decentralized principle. However, some still believe that AI enhances productivity and blockchain transforms production relationships, leading to efforts to combine the two.

Integrating AI and Web3 presents challenges, yet the potential benefits drive exploration. The convergence of blockchain and AI could unlock new business models, operational efficiencies, task automation, secure data exchange, and improved trust in economic processes.

The purpose of this article is to introduce a variety of combinations between AI and Web3. Notably, AI has been used to generate much of the content, even the title.

Since both AI and blockchain are still in their early stages, I’m certain that I won’t be able to address all the developments occurring in this field. I highly appreciate any feedback or corrections if there are any inaccuracies or missing details in the content provided.

I’ve put together a compilation of articles that I referenced in section 4, and they offer more in-depth research. Feel free to explore them if you’re interested.

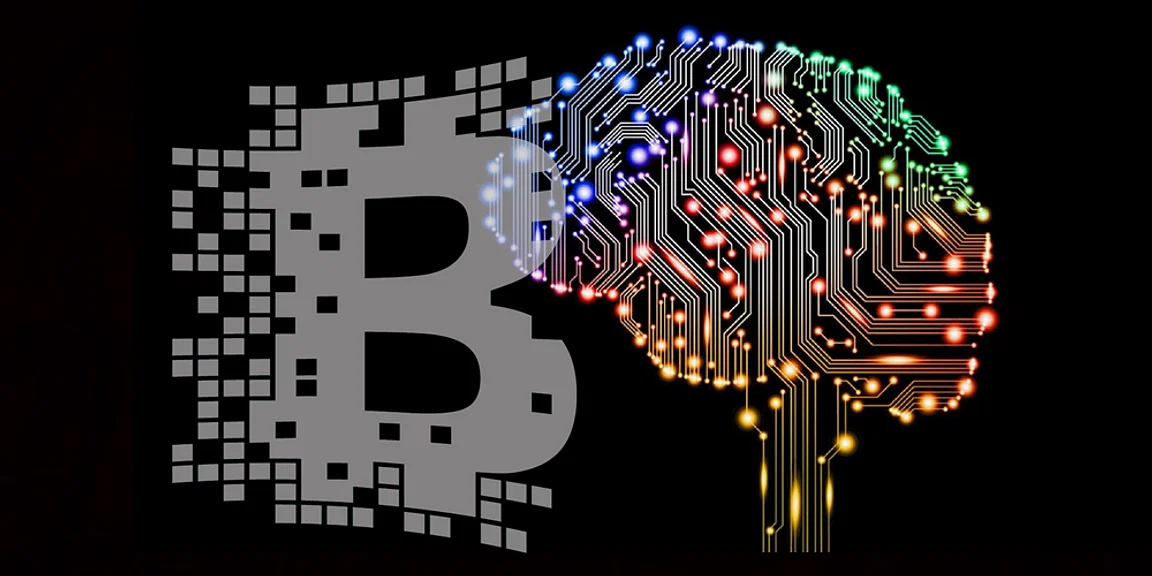

1. Total market share

Source: https://www.precedenceresearch.com/blockchain-technology-market

The global blockchain technology market size was estimated at USD 5.7 billion in 2021 and is projected to reach around USD 1,593.8 billion by 2030, growing at a compound annual growth rate (CAGR) of 87.1% during the forecast period 2022 to 2030.
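The growth rate quoted above can be sanity-checked with the standard formula CAGR = (end / start)^(1 / years) - 1. The following is a minimal sketch, assuming nine compounding years between the 2021 estimate and the 2030 projection; the report may use a different base year, so a small gap from the published figure is expected.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the paragraph above, in USD billions.
# Assumption: 2021 -> 2030 is treated as 9 compounding periods.
blockchain_cagr = cagr(5.7, 1593.8, years=9)
print(f"Implied blockchain CAGR: {blockchain_cagr:.1%}")
```

The same helper can be applied to the other market figures in this section to see how sensitive the quoted rates are to the choice of base year.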

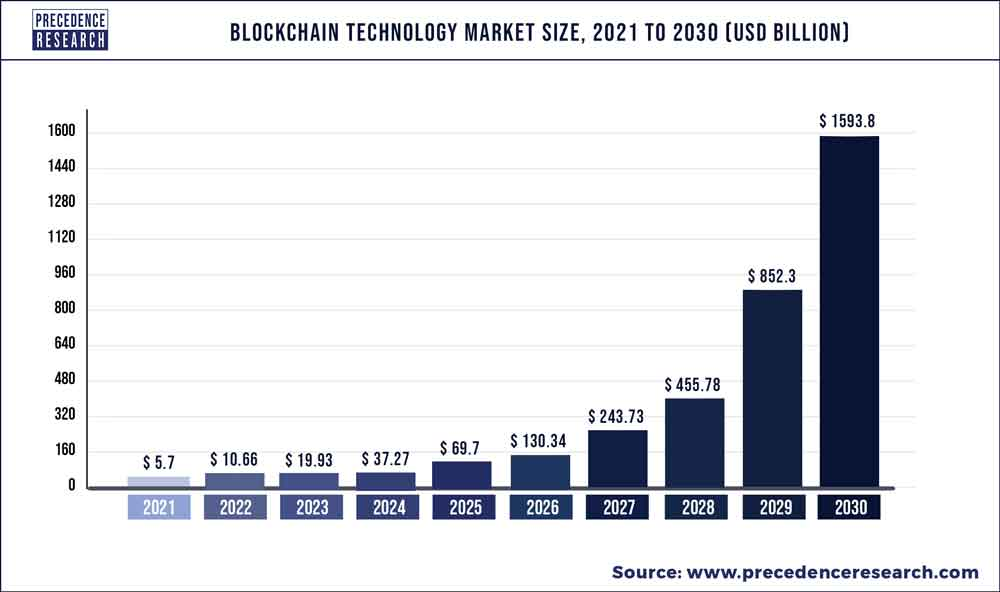

The global artificial intelligence (AI) market was valued at around USD 87.04 billion in 2021 and is expected to reach about USD 1,597.1 billion by 2030, with a projected CAGR of 38.1% from 2022 to 2030.

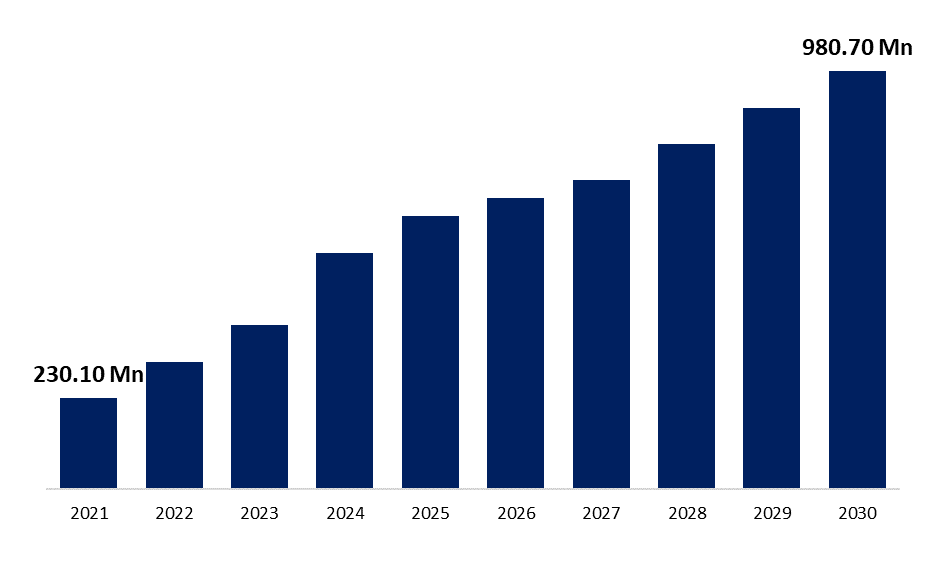

Source: https://www.sphericalinsights.com/reports/blockchain-ai-market

In 2021, the Blockchain AI market was valued at USD 230.10 million, and it is projected to reach USD 980.70 million by 2030, representing a CAGR of 24.06%.

The significantly smaller size of the Blockchain AI market in 2021, when compared to the individual markets of blockchain and AI, can be attributed to a variety of factors:

- New and Complex Tech: Both blockchain and AI are emerging and intricate technologies. Their integration is still in early stages, resulting in a smaller market compared to established tech.

- Limited Awareness: Businesses and tech sectors might not have fully grasped the synergies between blockchain and AI. Industries were exploring the benefits of combining these technologies.

- Technical Hurdles: Merging blockchain and AI involves overcoming challenges like interoperability, scalability, data privacy, and security, which slowed adoption and growth.

- Regulatory Uncertainty: The absence of standardized regulations for blockchain and AI limited adoption and investment.

- R&D and Investment: Research and investments were increasing to explore this convergence, but it takes time to yield mature solutions.

- Developing Use Cases: Time and experimentation were needed to create practical use cases leveraging both technologies, leading to increased adoption as successful cases emerged.

Key Players:

- Cyware

- NeuroChain Tech

- SingularityNET

- Core Scientific, Inc.

- NetObjex

- Fetch.ai

- Chainhaus, Inc.

- Hannah Systems

- BurstIQ

- VYTALYX

- Gainfy Healthcare Network

- AlphaNetworks

- Others

2. Combination of AI and Web3

2.1. “Decentralized AI”

AI development relies on three fundamental components: algorithms (models), computing power, and data.

- Algorithms (models) define how AI systems process and interpret information.

- Data serves as the foundational input that allows AI models to learn and make informed decisions.

- Computing power provides the necessary resources for performing complex computations required for AI tasks.

Web3, commonly described as the decentralized web, places significant importance on data privacy, ownership, and independence. Integrating AI with Web3 therefore leads naturally to the pursuit of “decentralizing” AI, which means decentralizing its three core elements: algorithms, computing power, and data.

2.1.1. Decentralization of Algorithms (models)

In a decentralized AI system, algorithms can be collaboratively developed and maintained by a network of participants. Smart contracts and DAOs can be utilized to collectively make decisions about algorithm updates, enhancements, and optimizations. Moreover, employing smart contracts and DAOs can establish a means to guarantee the proper utilization of AI algorithms. Rules and conditions can be encoded into the blockchain, preventing the algorithm from being utilized for malicious or unethical activities.

Kaggle is a widely recognized online platform that hosts data science competitions, offers datasets for exploration and analysis, and provides a space for collaboration and learning in the field of artificial intelligence and machine learning.

While Kaggle does not currently use blockchain, it could adopt decentralized governance through a DAO. This would empower the community to collaboratively shape the progress of AI models. Contributors could retain ownership of the models they develop while allowing others to build upon and improve them. Smart contracts could facilitate revenue-sharing agreements among contributors who collaborate on a model’s development. Community members could then vote on proposed changes using blockchain-based voting systems. This would make AI model evolution on Kaggle consensus-driven rather than solely controlled by administrators.
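To make the governance and revenue-sharing ideas concrete, here is a minimal off-chain sketch in Python. It is not how Kaggle or any particular DAO works: the names `tally` and `split_revenue`, the token-weighted voting rule, and the 50% quorum are all illustrative assumptions. On a real network this logic would live in a smart contract.

```python
from typing import Dict


def tally(votes: Dict[str, bool], balances: Dict[str, int], quorum: float = 0.5) -> bool:
    """Token-weighted vote (hypothetical rule): the proposal passes when
    turnout reaches the quorum and yes-weight exceeds no-weight."""
    total_supply = sum(balances.values())
    yes = sum(balances.get(voter, 0) for voter, choice in votes.items() if choice)
    no = sum(balances.get(voter, 0) for voter, choice in votes.items() if not choice)
    if total_supply == 0 or (yes + no) / total_supply < quorum:
        return False
    return yes > no


def split_revenue(amount: int, shares: Dict[str, int]) -> Dict[str, int]:
    """Split an integer amount pro rata by contribution share.
    Any rounding remainder goes to the largest contributor."""
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("shares must sum to a positive number")
    payouts = {name: amount * share // total for name, share in shares.items()}
    remainder = amount - sum(payouts.values())
    payouts[max(shares, key=shares.get)] += remainder
    return payouts


# Hypothetical token holders voting on a model update.
balances = {"alice": 60, "bob": 30, "carol": 10}
passed = tally({"alice": True, "bob": False}, balances)
payouts = split_revenue(1000, {"alice": 3, "bob": 1})
```

Integer arithmetic is used on purpose, since on-chain token amounts are normally handled as integers rather than floating-point values.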

2.1.2. Decentralization of Data

In the current situation, machine learning models are created through the analysis of substantial volumes of publicly accessible text and data. However, a limited group of individuals currently hold control and ownership over these data. The central question extends beyond the potential value of artificial intelligence; it revolves around establishing these systems in a manner that ensures economic benefits are accessible to all those who interact with them. Additionally, it is crucial to consider the structure of these systems in a way that empowers individuals to safeguard their privacy rights should they choose to share their data.

The push to decentralize data has led to significant interest in federated learning. This approach allows ML models to be trained on data that stays on separate nodes, so the raw data never has to be collected on a central server, which makes it valuable for various IoT applications. It could also reduce the risk of single points of failure and censorship.
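A common way to implement this idea is federated averaging: each node trains on its own data and shares only model weights, which are then averaged. The sketch below is a toy illustration in plain Python, assuming a one-dimensional linear model and three hypothetical nodes; the aggregation step is shown as a simple function, and in a Web3 setting that role might be played by a smart contract or a peer-to-peer protocol.

```python
from typing import List, Tuple

Weights = List[float]            # [slope, intercept]
Sample = Tuple[float, float]     # (x, y)


def local_update(weights: Weights, data: List[Sample],
                 lr: float = 0.05, epochs: int = 20) -> Weights:
    """Gradient descent on one node's private data for y = w * x + b."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Average the node updates, weighted by each node's sample count."""
    total = sum(sizes)
    return [sum(u[k] * s for u, s in zip(updates, sizes)) / total
            for k in range(len(updates[0]))]


# Three hypothetical nodes, each holding private samples of y = 2x + 1.
node_data = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
    [(-1.0, -1.0), (0.5, 2.0)],
]

global_weights = [0.0, 0.0]
for _ in range(30):
    # Only weights leave the nodes; the raw samples never do.
    updates = [local_update(global_weights, data) for data in node_data]
    global_weights = federated_average(updates, [len(d) for d in node_data])

w, b = global_weights
print(f"learned slope={w:.3f}, intercept={b:.3f}")
```

Weighting by sample count keeps nodes with more data from being underrepresented, which is the usual choice in federated averaging.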

Decentralized web3 technologies thrive on incentivization. Blockchain rewards validator nodes and miners with native crypto tokens for network maintenance. Users who contribute digital data for service or ML improvement are also incentivized in Web3.

Brave browser, known for privacy-focused features, rewards users with BAT tokens for viewing opt-in ads. Brave uses privacy-preserving machine learning and federated learning to predict user click behavior.

Ocean Protocol is a decentralized data exchange protocol that supports data sharing and monetization while ensuring privacy and control for data providers. The emergence of specialized AI data markets based on Ocean signifies the increasing interest in data-driven AI solutions in the web3 space.

2.1.3. Decentralization of Computing Power

The development and deployment of AI models involve two key processes:

- Training: Teaching the AI model to learn from labeled data to improve its ability to make accurate predictions on unseen examples.

- Inference/Reasoning: Using the trained AI model to make predictions on new, unseen data in real-world applications.

AI applications demand significant computing power, particularly GPUs for training and running complex models like ChatGPT. This has produced a paradox: AI teams face a GPU shortage even though a large amount of GPU capacity sits idle elsewhere. Factors such as limited availability, hardware requirements specific to AI workloads, infrastructure constraints, and rapid AI advancements contribute to this situation. As a result, a new market has emerged where individuals and organizations can lease their idle GPU resources to AI researchers and developers, helping to ease the shortage and support the continued growth of artificial intelligence.

Training large AI models demands significant computational resources, typically centralized in data centers, while inference tasks can be more easily decentralized and distributed. Some projects specialize in training, others in efficient inference using distributed resources. This may lead to a collaborative ecosystem of specialized projects, or to end-to-end AI solutions from leading projects that offer a seamless user experience.

Together secured $10 million in seed funding, and Gensyn raised $43 million in a Series A round led by a16z. Render Network, originally focused on 3D rendering, has extended its GPU network to support AI inference. Other players in this sector include Anyscale, Akash, Bittensor, Prodia, and more.

2.2. Authenticity Verification

Deep learning models like DALL-E, Stable Diffusion, and Midjourney have demonstrated the potential to generate various media forms from natural language or other inputs. While they offer productivity and creativity benefits, there are concerns about misuse for spreading misinformation and creating misleading content.

Blockchain’s cryptographic validation and timestamping can address authenticity challenges in media content. Decentralized platforms using this technology can empower content creators and users to ensure unaltered and trustworthy information propagation, differentiating between AI and human-generated content for societal stability.

Blockchain tokens, particularly non-fungible tokens (NFTs), can provide a solution for verifying the authenticity and provenance of digital content. NFTs, as unique digital assets, represent ownership and trace the history of various media forms. By assigning NFTs to content, creators establish a transparent digital fingerprint, promoting accountability for online content and empowering users to discern genuine from tampered content.
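The fingerprinting and timestamping idea can be illustrated with a content hash stored in a registry. The sketch below uses an in-memory Python dictionary as a stand-in for an on-chain registry or NFT metadata; `ProvenanceRegistry` and its methods are hypothetical names, not an existing API.

```python
import hashlib
import time
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ProvenanceRecord:
    content_hash: str   # SHA-256 fingerprint of the media bytes
    creator: str
    timestamp: float    # a real chain would use the block timestamp


class ProvenanceRegistry:
    """In-memory stand-in for an append-only on-chain registry (hypothetical)."""

    def __init__(self) -> None:
        self._records: Dict[str, ProvenanceRecord] = {}

    def register(self, content: bytes, creator: str) -> ProvenanceRecord:
        digest = hashlib.sha256(content).hexdigest()
        if digest in self._records:
            # First registration wins; later claims cannot overwrite it.
            return self._records[digest]
        record = ProvenanceRecord(digest, creator, time.time())
        self._records[digest] = record
        return record

    def lookup(self, content: bytes) -> Optional[ProvenanceRecord]:
        return self._records.get(hashlib.sha256(content).hexdigest())


registry = ProvenanceRegistry()
original = b"photo bytes captured by the creator"
registry.register(original, creator="alice")

tampered = original + b" (edited)"
print(registry.lookup(original))   # the record registered by alice
print(registry.lookup(tampered))   # None: any change alters the fingerprint
```

Note that a hash only shows that content is unchanged since registration. It says nothing about whether the content was generated by AI or a human, so it needs to be paired with a trusted statement at registration time.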

2.3. Buidling Engine

AI-assisted tools like GitHub Copilot increase the productivity of smart contract developers, while AI-powered APIs in smart contract applications lead to a new generation of Web3 applications. The introduction of Copilot, which is built on OpenAI’s language models, has impacted Stack Overflow’s traffic because it lets developers generate code automatically, reducing the need to search for solutions on the website.

In the context of smart contracts, auditing refers to reviewing smart contracts and protocols to identify vulnerabilities and risks, ensuring platform security. AI auditors might eventually outperform human auditors, but currently they serve as potent tools to enhance existing audit processes. Researchers have reported noteworthy progress, detecting vulnerabilities in under a second with 97% accuracy. This highlights the potential of these AI models to greatly improve the thoroughness and effectiveness of security audits.

Conor, a director at Coinbase, shared an experiment on Twitter in which he ran an Ethereum smart contract through GPT-4:

“In an instant, it highlighted a number of security vulnerabilities and pointed out surface areas where the contract could be exploited. I believe that AI will ultimately help make smart contracts safer and easier to build, two of the biggest impediments to mass adoption.”

AI also revolutionizes gaming, enabling effortless creation of new experiences by generating entire game worlds, assets, characters, and scripted events through natural language. Unity recently unveiled AI-generating tools, Unity Sentis and Unity Muse, in limited beta. With their impending official release next year, these tools exemplify AI’s increasing role in gaming. They empower developers to streamline game development, tapping into cutting-edge technology and unleashing innovative possibilities for gamers worldwide.

By simplifying the process and reducing barriers to entry, the introduction of these tools could foster a wider range of AIGC projects. Developers can now explore AI-driven game development without solely relying on complex algorithms and data-intensive models. As a result, we may witness an increase in AI-powered experiences and features in web3 gaming.

2.4. Intelligent DeFi Solutions

The incorporation of artificial intelligence into decentralized finance has the potential to give rise to intelligent DeFi solutions. Intelligent DeFi solutions leverage AI and machine learning techniques to enhance various aspects of the DeFi ecosystem. Here are a few ways in which AI can be applied to DeFi:

2.4.1. Fraud Detection

DeFi platforms involve the exchange of digital assets and tokens, making them susceptible to various forms of fraudulent activities such as phishing, spoofing, rug pulls, and more. AI can play a significant role in detecting and preventing these fraudulent behaviors.

Chainalysis, a company specializing in blockchain analytics, employs AI to analyze transaction data and detect suspicious activities. Law enforcement agencies utilize Chainalysis software to trace individuals involved in crimes related to cryptocurrencies.

TRM Labs uses AI to assign risk scores to each transaction and observe user behavior, enabling the identification of potential fraudulent actions.

2.4.2. Credit Scoring

AI has the potential to streamline credit evaluation in DeFi. By analyzing user data, repayment history, and financial behavior, AI algorithms can determine a borrower’s creditworthiness and appropriate interest rates. This decentralized approach ensures impartial and transparent credit assessment for all participants.

In November 2021, Credit DeFi Alliance (CreDA) launched a credit rating service using the CreDA Oracle and AI to determine a user’s creditworthiness through data from various blockchains.

2.4.3. Trading Strategy and Trading Bots

AI-powered tools can analyze market trends, sentiment, and liquidity data to provide valuable insights to DeFi traders and investors. This assists in making more informed decisions and optimizing trading strategies.

AI-driven trading bots handle trading tasks and execute transactions based on predefined strategies. They continuously assess real-time market data, including price shifts, trading volumes, and order book information. By utilizing machine learning, they identify optimal trade entry and exit points, helping traders maximize profits and minimize risks.
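As a point of reference, the simplest kind of predefined strategy a bot can run is a moving-average crossover. The sketch below is rule-based rather than machine learning, and the window sizes and function names are illustrative assumptions; an ML-driven bot would replace the fixed rule with a learned model while keeping a similar signal interface.

```python
from typing import List, Optional


def moving_average(prices: List[float], window: int) -> Optional[float]:
    """Mean of the last `window` prices, or None if there is not enough data."""
    if window <= 0 or len(prices) < window:
        return None
    return sum(prices[-window:]) / window


def crossover_signal(prices: List[float], short: int = 3, long: int = 5) -> str:
    """Return 'buy' when the short average is above the long one,
    'sell' when it is below, and 'hold' otherwise."""
    fast = moving_average(prices, short)
    slow = moving_average(prices, long)
    if fast is None or slow is None:
        return "hold"
    if fast > slow:
        return "buy"
    if fast < slow:
        return "sell"
    return "hold"


# Hypothetical price series, not real market data.
rising = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
falling = list(reversed(rising))
print(crossover_signal(rising), crossover_signal(falling))
```

This is a teaching example only. It ignores fees, slippage, and liquidity, all of which matter a great deal in DeFi markets.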

In DeFi trading, successful strategies can lose effectiveness as more people adopt them, known as “strategy decay.” Increased usage leads to reduced profitability. Traders and AI robots must consistently adapt and switch strategies for a competitive edge. The future success of AI trading robots is uncertain, and their position among traders hinges on their adaptability and ability to outperform traditional methods.

KyberAI by Kyber Network is a machine learning tool for traders. It covers 4,000 tokens across seven chains, showing real-time KyberScores for bullish or bearish trends. It analyzes on-chain activities, trade volumes, and technical aspects.

2.4.4. Portfolio Management

Blockchain applications are inherently composable, meaning they can be combined to create complex interconnected loops of financial transactions. AI models can leverage this composability to carry out sophisticated financial strategies without the limitations of a paper-based financial system.

AI-driven tools analyze performance, trends, and risk, offering personalized asset allocation. They adapt portfolios to market shifts, potentially improving returns. These AI algorithms assist in DeFi portfolio management, generating personalized investment advice by considering risk tolerance, goals, market conditions, and historical data.

In traditional financial markets, many institutions have been building their own AI bots for years. These bots, although not connected to blockchain, have already reached a considerable level of maturity.

In a shareholder letter from 2022, JPMorgan Chase’s CEO Jamie Dimon hinted at the growing significance of AI for the company:

“AI has already added significant value to our company. For example, in the last few years, AI has helped us to significantly decrease risk in our retail business (by reducing fraud and illicit activity) and improve trading optimization and portfolio construction (by providing optimal execution strategies, automating forecasting and analytics, and improving client intelligence).”

2.5. ZKML

Blockchain networks operate under unique economic constraints. Developers are encouraged to optimize their code for on-chain execution to avoid excessive computational costs. This emphasis on optimization drives the demand for efficient proof systems like SNARKs. Blockchain networks have spurred the optimization of zk-SNARKs, with positive effects beyond just blockchain applications. These efficiency gains can extend to diverse computing areas, including machine learning.

2.5.1. Model Authenticity

Functional commitments are cryptographic techniques that let one party prove the outcome of a function’s operation without disclosing the function itself or its inputs.

💡 Functional commitments in simple terms: Imagine having a locked black box that carries out a particular task. You wish to convince someone that this box consistently produces the same output for the same input. Yet you are unwilling to reveal the inner workings of the box (its intricate algorithms and private information).

ZKPs and functional commitments work together to verify computations while keeping data private. Functional commitments securely commit to values, which ZKPs validate without revealing specifics. Functional commitments ensure result consistency, while ZKPs confirm correctness, all while safeguarding data privacy.

ZKML verifies that a service provider delivers the promised model, crucial for Machine Learning as a Service (MLaaS). It ensures that the API-accessed model is genuine, preventing fraudulent substitutions. For instance, a user who pays for access to a specific advanced MLaaS through an API might want to ensure that the service provider is not secretly swapping the model with a less capable one.

The process works like this:

- Commitment: The service provider commits to the model’s outputs for a given set of inputs using a functional commitment scheme.

- Zero-Knowledge Proof Generation: The provider generates a proof using technologies like SNARKs to demonstrate that the committed model outputs are correct for the inputs.

- Verification: The recipient of the model’s predictions (the user) can then verify the provided proof. This verification step ensures that the model generates dependable predictions as stated by the provider, all while preserving the confidentiality of the model itself.
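The commit-then-verify flow above can be illustrated with an ordinary hash commitment. This is deliberately not a zero-knowledge proof: in this sketch the check only works if the weights are later revealed to an auditor, which is exactly the step a SNARK removes. The function names and the toy linear model are assumptions for illustration.

```python
import hashlib
import json
import secrets
from typing import List


def commit(weights: List[float], salt: bytes) -> str:
    """Hash commitment to the model parameters (binding, and hiding via the salt)."""
    return hashlib.sha256(json.dumps(weights).encode() + salt).hexdigest()


def predict(weights: List[float], features: List[float]) -> float:
    """Toy linear model standing in for the paid MLaaS model."""
    return sum(w * x for w, x in zip(weights, features))


def audit(commitment: str, revealed_weights: List[float], salt: bytes,
          features: List[float], claimed_output: float) -> bool:
    """Auditor check: the revealed model matches the commitment AND
    reproduces the output the provider served. A real ZKML system proves
    both facts without revealing the weights."""
    same_model = commit(revealed_weights, salt) == commitment
    same_output = abs(predict(revealed_weights, features) - claimed_output) < 1e-9
    return same_model and same_output


# 1. The provider commits to the promised model up front.
promised = [0.5, -1.25, 2.0]
salt = secrets.token_bytes(16)
public_commitment = commit(promised, salt)

# 2. The provider serves a prediction for a user's input.
features = [1.0, 2.0, 3.0]
served = predict(promised, features)

# 3. Later, an audit confirms the served output came from the committed model.
honest = audit(public_commitment, promised, salt, features, served)

# A provider that quietly swapped in a cheaper model fails the same audit.
cheaper = [0.5, -1.0, 2.0]
swapped = audit(public_commitment, cheaper, salt, features, predict(cheaper, features))
```

The salt prevents anyone from guessing the weights by hashing candidate models, which matters when the parameter space is small.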

2.5.2. Model Integrity

ZKPs can be employed to ensure the uniform application of a single machine learning algorithm to diverse users’ data, all the while safeguarding privacy and circumventing biases. This proves especially valuable in sensitive domains like credit evaluation, where the avoidance of biases is crucial. Functional commitments can be utilized to validate that the model was executed using the committed parameters for the data of each user.

For example, a medical diagnostics system uses patient data to produce accurate disease predictions, aiding doctors’ decisions without compromising privacy. The algorithm is trained on varied data to reduce biases, while a ZKP protocol and functional commitments show that the same committed model was applied to every patient’s data and that each prediction was derived solely from that data.

2.5.3. Model Evaluation

Businesses and organizations can use ZKML to demonstrate a machine learning model’s accuracy without revealing its parameters. Buyers can then verify the model’s performance on a randomly chosen test set before purchasing, ensuring that they are investing in a legitimate and effective product.

Kaggle is a platform where data scientists and machine learning practitioners compete to create the best predictive models for various tasks. However, revealing the specific details of a winning model can be problematic due to intellectual property concerns or the desire to keep proprietary methods hidden. Utilizing ZKML in decentralized Kaggle-style competitions empowers participants to display the accuracy of their models while ensuring the protection of the intricate details of their models and weights.

2.5.4. Model Minimization

In the context of producing zero-knowledge proofs for the ML model, my focus is exclusively on inference, because while both training and inference involve computational processing, the inference phase is generally less demanding in terms of computational power compared to the training phase.

Demonstrating the inference of machine learning models using zero-knowledge proof systems is expected to become a major breakthrough in the technology sector for this decade. Machine learning models, especially deep neural networks, can have a large number of parameters, requiring significant computational resources. A zero-knowledge proof condenses the evidence of such a computation into a succinct proof that is far smaller than the model itself, attesting that the prediction is correct without revealing the data or the model.

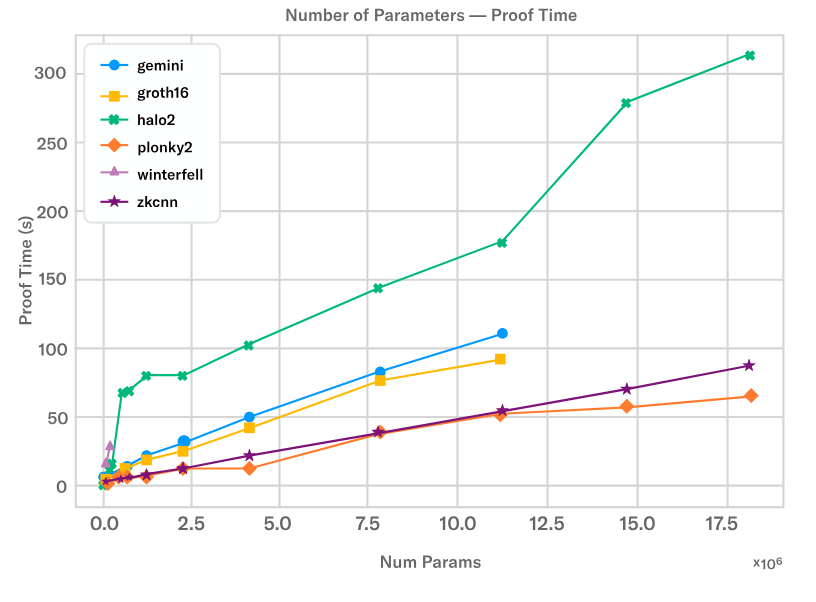

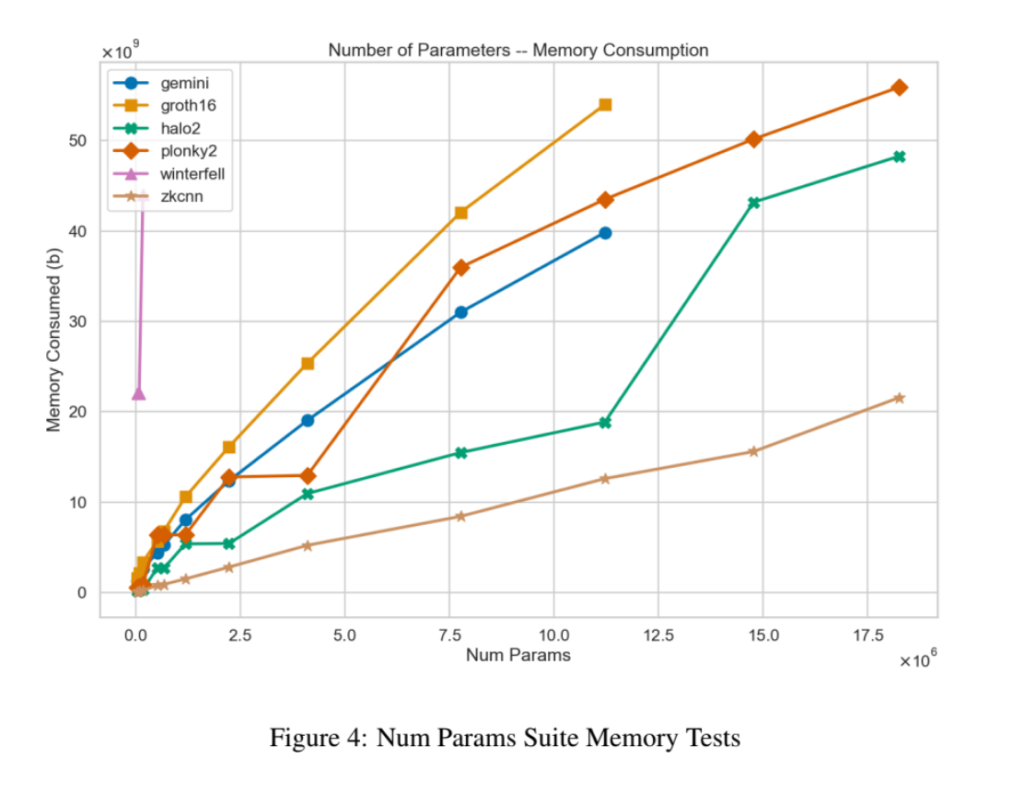

💡 There are 4 foundational metrics in any zero-knowledge proof system:

- Proof Generation Time: Time to create a proof for an AI inference.

- Peak Prover Memory Usage: Maximum memory needed for proof generation.

- Verifier Runtime: Time for the verifier to check the proof’s validity.

- Proof Size: Amount of data the proof requires.

Efficient systems aim for quick proofs, low memory use, fast verification, and compact proofs, crucial for applications like AI inference.
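These four metrics can be collected with a small benchmarking harness. The sketch below uses only the Python standard library; `toy_prove` and `toy_verify` are placeholders that hash repeatedly to simulate work and are not a zero-knowledge proof system. A real prover and verifier would be plugged into `benchmark` in their place.

```python
import hashlib
import time
import tracemalloc
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProofMetrics:
    proof_generation_seconds: float
    peak_prover_memory_bytes: int
    verifier_seconds: float
    proof_size_bytes: int


def toy_prove(statement: bytes) -> bytes:
    """Placeholder workload: NOT a real proof, just repeated hashing."""
    digest = statement
    for _ in range(10_000):
        digest = hashlib.sha256(digest).digest()
    return digest


def toy_verify(statement: bytes, proof: bytes) -> bool:
    """Placeholder verifier: recomputes the workload (a real verifier is far cheaper)."""
    return toy_prove(statement) == proof


def benchmark(prove: Callable[[bytes], bytes],
              verify: Callable[[bytes, bytes], bool],
              statement: bytes) -> ProofMetrics:
    # tracemalloc tracks Python-level allocations and slows execution,
    # so the timings here are indicative only.
    tracemalloc.start()
    start = time.perf_counter()
    proof = prove(statement)
    prove_seconds = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    start = time.perf_counter()
    ok = verify(statement, proof)
    verify_seconds = time.perf_counter() - start
    if not ok:
        raise ValueError("proof failed verification")
    return ProofMetrics(prove_seconds, peak, verify_seconds, len(proof))


metrics = benchmark(toy_prove, toy_verify, b"inference transcript")
print(metrics)
```

For provers implemented in native code, peak memory would need to be measured at the process level instead, since `tracemalloc` only sees allocations made through the Python allocator.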

Numerous teams are dedicated to enhancing ZK technology through various means, ranging from optimizing hardware to refining proof system architecture. For comprehensive resources related to ZKML, please refer to the ZKML community’s repository awesome-zkml on GitHub.

One of the many initiatives working to improve ZKML systems is Jason Morton’s EZKL library, which transforms machine learning models into ZK-SNARK circuits. The library is compatible with ONNX models, and the resulting circuits can be integrated into a ZKP framework. Currently, EZKL can verify models of up to 100 million parameters.

💡 ONNX is a standardized format for exchanging neural network models across different frameworks and platforms.

ONNX is like a translator for computers that helps them share their smart ideas (machine learning models) with each other, so they can all work together and learn faster.

💡 A circuit is a setup of interconnected logic gates that perform operations on inputs to produce outputs. In ZK-SNARK, circuits represent computations, enabling concise proofs of correctness without revealing details.

You’ve got a secret cake recipe you want to prove you know without telling the recipe. A ZK-SNARK uses steps for things like mixing and baking, represented by symbols. You decode those symbols with special math. Your friend checks your answers using the same math, but can’t find out the recipe. So, ZK circuits confirm your knowledge without giving away the secret recipe.

Modulus Labs, a ZKML-focused startup, released a paper called “The Cost of Intelligence.” In this paper, they assessed different ZK proof systems. The evaluation involved testing neural networks of various sizes (measured by the number of parameters) and their memory consumption, as depicted in the two figures presented below.

Source: “The Cost of Intelligence: Proving Machine Learning Inference with Zero-Knowledge.” Modulus Labs.

2.5.5. Attestations

Zero-knowledge proofs enable the incorporation of attestations from externally verified parties into on-chain models or smart contracts. Through the validation of digital signatures using these proofs, one can establish the genuineness and origin of the information. This is a second solution to the authenticity issue discussed in section 2.2.

The process works like this:

- Attestation Process: External verified parties create attestations, which are statements that confirm the accuracy of certain information. For example, an attestation might state that a specific image was taken at a particular location and time.

- Digital Signatures: The verified parties attach digital signatures to their attestations. These signatures are generated using cryptographic methods, and they serve as a proof of the attestation’s authenticity and the verified party’s identity.

- Zero-Knowledge Proofs: Used in blockchain or smart contracts, they validate signed attestations without disclosing content.

- Ensuring Authenticity and Provenance: By using ZKPs to verify the digital signatures on the attestations, the system can confirm that the information comes from a trusted source and has not been tampered with.
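The signing and verification steps above can be sketched with a Lamport one-time signature, chosen here only because it needs nothing beyond a hash function from the Python standard library. Production systems would typically use ECDSA or EdDSA, and the zero-knowledge layer that hides the attestation content is not shown. The attestation text is hypothetical.

```python
import hashlib
import secrets
from typing import List, Tuple

KeyPair = List[Tuple[bytes, bytes]]  # 256 pairs, one pair per message-hash bit


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _bits(digest: bytes) -> List[int]:
    """The 256 bits of a SHA-256 digest, most significant bit first."""
    return [(byte >> (7 - i)) & 1 for byte in digest for i in range(8)]


def keygen() -> Tuple[KeyPair, KeyPair]:
    """Secret key: random values. Public key: their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(_h(a), _h(b)) for a, b in sk]
    return sk, pk


def sign(sk: KeyPair, message: bytes) -> List[bytes]:
    """Reveal one secret per bit of the message hash. Use each key only once."""
    return [sk[i][bit] for i, bit in enumerate(_bits(_h(message)))]


def verify(pk: KeyPair, message: bytes, signature: List[bytes]) -> bool:
    """Check that every revealed secret hashes to the matching public value."""
    bits = _bits(_h(message))
    if len(signature) != len(bits):
        return False
    return all(_h(sig) == pk[i][bit]
               for i, (sig, bit) in enumerate(zip(signature, bits)))


# A verified party signs an attestation (hypothetical content).
secret_key, public_key = keygen()
attestation = b"image 0xabc was captured at location X on 2023-08-21"
signature = sign(secret_key, attestation)

valid = verify(public_key, attestation, signature)
forged = verify(public_key, attestation + b" (altered)", signature)
```

In a ZKML setting, the verification function itself would be expressed as a circuit, so that a proof can show "a valid signature from a trusted party exists" without disclosing the attestation.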

2.5.6. Legal Discovery

ZKML provides a valuable solution for conducting audits and legal discovery processes while safeguarding sensitive information, analogous to the principles employed in KYC procedures. This innovative approach allows auditors and investigators to validate the accuracy and adherence of machine learning models without direct access to the original data. This not only ensures the preservation of data privacy but also facilitates compliance with regulatory standards.

2.5.7. Proof of Personhood

Proof of Personhood is a method to verify someone’s identity without revealing personal information. It involves a secure verification process, followed by the creation of a “proof” using zero-knowledge proofs (ZKPs). This proof confirms the person’s verified status without disclosing any details about their identity. This approach enhances privacy and security while ensuring the uniqueness of the individual. The proof can be used for various purposes without compromising anonymity.
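One building block often used to enforce uniqueness without revealing identity is a nullifier: a value derived from a private identity secret and an application identifier. The sketch below shows only that uniqueness check, under the assumption that a separate zero-knowledge proof (not shown) establishes that the secret belongs to a verified person. The names are hypothetical and this is not the World ID API.

```python
import hashlib
import secrets
from typing import Set


def nullifier(identity_secret: bytes, app_id: str) -> str:
    """Deterministic per-app tag. It cannot be linked across apps
    without knowing the identity secret."""
    return hashlib.sha256(identity_secret + b"|" + app_id.encode()).hexdigest()


class PersonhoodRegistry:
    """Accepts each nullifier once, so one person gets one claim per app."""

    def __init__(self, app_id: str) -> None:
        self.app_id = app_id
        self._seen: Set[str] = set()

    def claim(self, nullifier_hash: str) -> bool:
        if nullifier_hash in self._seen:
            return False   # this person has already claimed
        self._seen.add(nullifier_hash)
        return True


alice_secret = secrets.token_bytes(32)   # stays on the user's device
airdrop = PersonhoodRegistry("airdrop-2023")

first = airdrop.claim(nullifier(alice_secret, airdrop.app_id))
second = airdrop.claim(nullifier(alice_secret, airdrop.app_id))
```

Because the application identifier is part of the hash, the same person produces unrelated nullifiers in different applications, which limits cross-application tracking.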

Worldcoin recently introduced a privacy-first, decentralized and permissionless identity protocol, known as World ID, to help establish PoP on a global scale.

3. The Future of AI and Blockchain

In conclusion, as we delve deeper into the realm of AI and Web3 fusion, it becomes increasingly evident that the harmonious convergence of these two transformative technologies holds the key to unlocking unprecedented possibilities. The intricate interplay between AI’s intelligence amplification and Web3’s decentralized coordination paves the way for a future where innovation, transparency, and efficiency flourish across industries. By embracing this synergy, we embark on a journey that promises to reshape the landscape of technology and propel us into a new era of limitless potential and boundless opportunities.

4. References

Precedence Research (2022) Artificial intelligence market size to surpass around US$ 1,597.1 bn by 2030, GlobeNewswire News Room. Available at: https://www.globenewswire.com/news-release/2022/04/19/2424179/0/en/Artificial-Intelligence-Market-Size-to-Surpass-Around-US-1-597-1-Bn-By-2030.html (Accessed: 21 August 2023).

(No date) Geometry research. Available at: https://geometry.xyz/notebook/functional-commitments-zk-under-a-different-lens (Accessed: 21 August 2023).

Blockchain AI market size – global analysis, forecast 2021-30 (no date) Spherical Insights. Available at: https://www.sphericalinsights.com/reports/blockchain-ai-market (Accessed: 21 August 2023).

New Order (2023) Crypto’s next frontier: AI & DeFi, Medium. Available at: https://medium.com/neworderdao/cryptos-next-frontier-ai-defi-15d46ec78e40 (Accessed: 21 August 2023).

Burger, E. (2023) Checks and balances: Machine learning and Zero-knowledge proofs, a16z crypto. Available at: https://a16zcrypto.com/posts/article/checks-and-balances-machine-learning-and-zero-knowledge-proofs/ (Accessed: 21 August 2023).

An Introduction to zero-knowledge machine learning (ZKML) (no date) Worldcoin. Available at: https://worldcoin.org/blog/engineering/intro-to-zkml (Accessed: 21 August 2023).

Modulus Labs (2023) Chapter 5: The cost of intelligence, Medium. Available at: https://medium.com/@ModulusLabs/chapter-5-the-cost-of-intelligence-da26dbf93307 (Accessed: 21 August 2023).